On the Road to the Brainships:

A Look at the Current Science of Interfacing the Brain

by Tedd Roberts

There are many science fiction (SF) stories in which the characters have some form of direct brain-to-computer connection, but none quite rivals the complete brain-to-starship interface of Anne McCaffrey's The Ship Who Sang and the subsequent collaborative novels. To mark this month's rerelease of The Ship Who Searched by McCaffrey and coauthor Mercedes Lackey, Baen Books has asked me to reflect on brain and machine connections in science fiction compared to the present-day neuroscience of brain interfaces. When I started my study of neuroscience thirty years ago, my goal was to work in the field of bionics as envisioned in Martin Caidin's Cyborg novels (the basis for The Six Million Dollar Man TV series). Little did I know that such a field did not exist at that time; likewise, little did I imagine that I would play a role in creating it!

Brain-to-machine, or brain-to-computer links in SF take many forms – from the full-body interfaces of the Brain Ships, to "simple" control interfaces as in the computer input device imagined by James Hogan in The Genesis Machine. Since it can be argued that all such interfaces require some form of electronic or computerized "decoder" to interpret brain signals and convert them to machine instructions or controls, we'll use the generic term "Brain-Computer Interface" (BCI) here, even though there are additional body-to-computer and instrumentation-to-body connections implied by many of the devices and stories discussed below.

From the start, it is very important to understand that most neuron (i.e. brain cell) activity is electrical. There is some chemical activity in there, too, but what we can most easily work with in terms of brain interfaces is the electrical activity. Since electrical technology is well within our current technological capabilities, we can assume the ability to both "read" and "write" neuron signals with the BCIs we'll discuss here (and get back to a discussion of the mechanism later). We'll first discuss types and examples of BCIs, then the current state-of-the-art in brain interfaces before moving on to use of these concepts in SF, and the future of both science and science fiction.

Types of Brain Interfaces

For my own purposes, I define three functional types of brain interfaces: Input, Output and Internal. Input Interfaces are those designed to bring information about the outside world to the brain, bypassing the respective sensory system. Frankly, any means of interacting with our environment is an "interface," so Braille type and sign language are "Input Interfaces" that allow visual or auditory information, respectively, to reach the brain via pathways other than the original visual or auditory mode. However, since we are specifically discussing direct interfaces, the Input section will focus on state-of-the-art examples of sensory replacement, i.e. cochlear implants and retinal implants, and then look into some current research into devices that directly connect to the brain.

Output Interfaces are those which allow brain signals to directly control a device either attached to, or separate from our bodies. On the low-tech side, mechanical legs and myoelectric prosthetic limbs (basically the claw-style prosthetic arm triggered by flexing back and shoulder muscles) have been around for decades. In the case of artificial legs, it's become pretty high art; however, these mechanical devices are not directly brain-controlled. We'll cover the latest science in brainwave and direct neural interfaces to control advanced prosthetic limbs.

Finally, brain-to-brain or Internal Interfaces incorporate those devices that do not connect outside the brain, but are specifically designed to replace or repair specific damaged areas of the brain. Interesting applications to enhance brain function will also be discussed in that section.

Input Interface BCIs

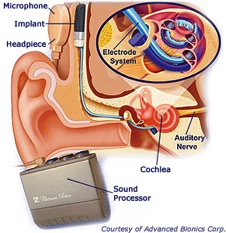

The cochlear implant dates from research at multiple institutions in the 1970s, and consists of a computerized sound processor linked to a long electrode implanted into the cochlea, the primary sensory organ for sound. The cochlea is much like a coiled pipe or a seashell. The structure is "tuned" to respond to different sound frequencies, or pitches, at different distances along the "pipe." Neurons (in this case, sensory cells rather than generic brain cells) located at various distances along the cochlea are thus likewise "tuned" to respond to different frequencies of sound. The electrode of a cochlear implant is implanted lengthwise through the cochlea, so that different locations along the electrode can deliver precise electrical signals to the neurons at that point in the cochlea. The sound processor of a cochlear implant converts different types of sounds to a pattern of electrical signals distributed by time and space (distance along the cochlea), allowing the information about sound qualities to be picked up by the rest of the ear and brain's normal systems for processing sounds.

Cochlear Implant

Image courtesy of Advanced Bionics Corporation
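To make the "time and space" idea concrete, here is a minimal sketch of how a sound processor might turn a sound into a per-electrode stimulation pattern. It is an illustration only, not the algorithm of any real implant: the function name, the 16 electrodes, the frequency range, and the frame length are all assumptions chosen for the example.

```python
import numpy as np

def electrode_pattern(signal, sample_rate, n_electrodes=16, frame_len=256,
                      f_lo=200.0, f_hi=7000.0):
    """Return (pattern, edges): pattern[frame, electrode] is the sound energy
    in that electrode's frequency band during that time frame.

    All default values are illustrative assumptions, not device specifications.
    """
    # Log-spaced band edges: each electrode "owns" one band, mimicking the
    # way position along the cochlea corresponds to pitch.
    edges = np.geomspace(f_lo, f_hi, n_electrodes + 1)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)
    n_frames = len(signal) // frame_len
    pattern = np.zeros((n_frames, n_electrodes))
    window = np.hanning(frame_len)
    for i in range(n_frames):
        frame = signal[i * frame_len:(i + 1) * frame_len] * window
        power = np.abs(np.fft.rfft(frame)) ** 2
        for ch in range(n_electrodes):
            in_band = (freqs >= edges[ch]) & (freqs < edges[ch + 1])
            pattern[i, ch] = power[in_band].sum()
    return pattern, edges

# A pure 1000 Hz tone should drive mainly the electrode whose band contains 1000 Hz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate  # one second of samples
tone = np.sin(2 * np.pi * 1000.0 * t)
pattern, edges = electrode_pattern(tone, sample_rate)
strongest = int(np.argmax(pattern.mean(axis=0)))
print(strongest, edges[strongest], edges[strongest + 1])
```

Each row of `pattern` is one moment in time and each column is one location along the electrode, which is the sense in which the signal is "distributed by time and space."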

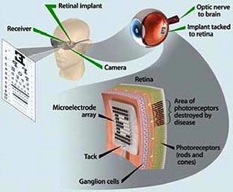

Retinal implants work in a similar manner, in which video from a camera is processed into "pixels" which in turn are used to stimulate electrode sites placed against the back of the eye. Like the cochlear implant, a retinal implant is designed not to replace the eye, but to activate the retinal tissue that remains after diseases such as retinitis pigmentosa or macular degeneration have damaged the light-sensitive cells of the eye. Development of retinal implants has been ongoing since the 1990s, and as of today (2013) they are capable of mimicking up to 100 discrete "pixels," allowing patients to read (slowly, one letter at a time) and to see object shapes by contrast and edges. New electrode designs of up to 1000 pixels will be available within five years.

The Argus II retinal prosthesis

Image courtesy of Second Sight
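The reduction of camera video to roughly 100 "pixels" can be sketched as simple block averaging. This is only an illustration under stated assumptions: the 10-by-10 grid, the eight stimulation levels, and the function name are hypothetical, and real devices apply considerably more image processing.

```python
import numpy as np

def to_electrode_grid(image, grid=(10, 10), levels=8):
    """Block-average a grayscale image down to `grid` cells and quantize
    each cell to one of `levels` stimulation levels (0 = darkest).

    Grid size and level count are illustrative assumptions.
    """
    rows, cols = grid
    h, w = image.shape
    bh, bw = h // rows, w // cols
    # Crop so the image divides evenly into blocks, then average each block.
    cropped = image[:bh * rows, :bw * cols].astype(float)
    blocks = cropped.reshape(rows, bh, cols, bw).mean(axis=(1, 3))
    lo, hi = blocks.min(), blocks.max()
    if hi == lo:
        return np.zeros(grid, dtype=int)  # uniform scene: nothing to show
    return np.round((blocks - lo) / (hi - lo) * (levels - 1)).astype(int)

# A camera frame with a dark left half and a bright right half: one vertical edge.
frame = np.zeros((120, 160))
frame[:, 80:] = 255.0
grid = to_electrode_grid(frame)
print(grid[0])
```

With so few cells, only strong contrast and edges survive, which matches the patients' experience of seeing shapes rather than detail.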

While tens of thousands of patients have received cochlear implants, only a few dozen have been tested with retinal implants. Still, these are not the fully cybernetic "bionic ear" or "bionic eye" we may have expected. Such an interface requires connection directly to the part of the brain that decodes the signals from the retina or cochlea. We're still a long way from McCaffrey's Brain Ships or Caidin's Six Million Dollar Man. Prosthetic devices cannot yet be fully integrated with brain inputs, nor can they completely replace the role of the natural sensory organs. In many ways the science of "bionics," or more accurately neural prosthetics, is still quite primitive and will remain so until several very important factors are resolved.

These problems are, in no particular order: the complexity of the signals that have to be interfaced with the brain, the longevity of the electrodes that need to interface with the brain, the complexity of the prosthetic (such as the number of pixels in the visual field, or the multitude of movements possible at a single joint), powering the prosthetic, and the weight of the prosthetic.

The issue of complexity is made rather obvious by the fact that current visual and auditory prosthetics are unable to render the rich diversity of sight and sound; however, this is primarily an issue of the density of receptors and stimulation electrodes.

While research is ongoing to develop means of putting signals into the brain, it is still much easier to read the signals coming from the brain. Both techniques require that scientists record and understand the signals and the "code" the brain uses to represent sight and sound. However, when it comes to artificial limbs, there are factors that must be considered that elevate the complexity of the code to many new dimensions: how many joints, the directions those joints can move, movement of joints in synchrony, and coordinating all of those movements. There is also the problem of appropriate electrodes to interface a prosthetic with the brain. While we as neuroscientists think we may have a good handle on the problem, we still don't have a reliable ability to insert electrical signals into the brain for a fully functional "bionic" eye or ear. As necessary as it would seem to provide prosthetics for vision and hearing, it is now even more important to repair or replace the sense of touch to allow for fully functional prosthetic limbs... but more on that in the next section.

Output Interface BCIs

Some rather astounding work has been accomplished in the past five years toward developing a brain-operated upper limb prosthetic: in other words, a bionic arm. The defense research agency DARPA is considered by many to essentially fund "science fiction." DARPA funds a project called Revolutionizing Prosthetics to develop a brain-interfaced upper arm prosthetic, essentially similar to the Six Million Dollar Man prosthetic.

One of the principal goals of this development was complex motions such as flexing fingers and rotating the wrist, with a device that was no heavier than a limb made of flesh and blood. Starting in 2007, the original project funded a company by the name of DEKA, founded by Dean Kamen, inventor of the Segway. It was quite successful, and resulted in a follow-up program which began in 2009, to begin the first tests of interfacing the prosthetic with the brain. As of 2011, patients with brain-implanted electrodes were controlling limbs mounted to a supporting framework in the lab (Brown University and University of Pittsburgh). The limbs were not yet wearable; however, in 2013, the first patients will be fitted with wearable artificial limbs controlled directly by signals from the brain. This is a multi-institutional research project, spearheaded by Johns Hopkins University, and involving at least ten other universities and a similar number of foundations and private companies. For a short summary of the program and many of the reports from DARPA, click here and use search keywords "revolutionizing prosthetics."

One question that may be asked is: "Why concentrate on just an upper limb or arm and hand prosthetic, and not a lower limb leg and foot prosthetic?" A major reason is that at present brain control is not necessary for effective prosthetics for lower limbs. Biomechanical devices that utilize springs, latches and pistons are quite capable of mimicking lower limb function provided some residual portion of the leg below the hip is present. (Note that even these biomechanical limbs still meet the definition of "bionic" or "lifelike" as first defined by Dr. Jack E. Steele, who coined the term in the 1950s.) The next revolution in lower limb prosthetics will be to provide self-motivating legs to replace total amputation from the hip. However, the complex motions required to lift, twist, handle, and manipulate objects as required by fingers, wrists, and elbows have placed the current emphasis for bioelectronic prosthetics on the upper limb.

Even without direct control of a bionic limb, there is continued need for "output" BCIs to control external devices. We start to come very close to the situation of McCaffrey's "shellpersons" when we consider patients with "locked-in" syndrome. Researchers at Brown University have developed the "BrainGate" BCI that potentially allows control of many different devices, from bionic limbs to power chairs to computers. The BrainGate uses implanted electrodes (see BCI Electrodes below) to control a set of on-off switches (many on-off switches!) which can then be translated to machine controls. Similar techniques can be applied using EEG, but implanted electrodes allow greater understanding of complex codes, as well as greater precision in recording those codes. Much of the work with output BCIs requires the recording of neural activity to be processed and/or modeled by computer, so much of this work is being done simultaneously in animals and humans to better design the BCIs for eventual human use.
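The "on-off switches translated to machine controls" idea can be illustrated with a toy decoder. This is not the BrainGate algorithm, which is far more sophisticated; the four commands, the firing-rate threshold, and the function names are all hypothetical, chosen only to show how a bank of binary switches could drive a device such as a power chair.

```python
# Hypothetical assignment of four recording channels to power-chair commands.
COMMANDS = ("forward", "back", "left", "right")

def switches_from_rates(rates_hz, thresholds_hz):
    """Turn each channel's firing rate into an on/off switch by thresholding."""
    return [rate > thr for rate, thr in zip(rates_hz, thresholds_hz)]

def decode_command(rates_hz, thresholds_hz):
    """Map the switch states to one command; stop if the state is ambiguous."""
    switches = switches_from_rates(rates_hz, thresholds_hz)
    active = [name for name, on in zip(COMMANDS, switches) if on]
    # Exactly one switch on gives that command; none or several means stop.
    return active[0] if len(active) == 1 else "stop"

thresholds = [20.0, 20.0, 20.0, 20.0]  # assumed per-channel thresholds, in spikes/s
print(decode_command([5.0, 4.0, 30.0, 6.0], thresholds))   # only channel 3 is active
print(decode_command([30.0, 30.0, 1.0, 1.0], thresholds))  # two active: ambiguous
```

Defaulting to "stop" whenever the switches disagree is a deliberate design choice in this sketch: for a device carrying a person, doing nothing is the safer failure.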

With all of the wonderful progress that's being made on prosthetics with this program, a major hurdle is still providing sensory feedback from the limb to the brain. In many ways this becomes a problem very similar to advanced visual and auditory prosthetics. Several of the teams funded by the Revolutionizing Prosthetics program are working on precisely the issue of providing both tactile and proprioceptive feedback to the sensory cortex (“proprioceptive” means the sensory perception of motion found chiefly in muscles, tendons, joints, and the inner ear). However, as I said, direct sensory input to the brain requires developing interface techniques that have lagged behind the output techniques. Fortunately, the more recent developments in Internal BCIs are providing results applicable to both Input and Output BCIs.

Internal BCIs

While "brain-to-brain" interfaces may sound like something Commander Spock should do on Star Trek, in prosthetic terms a brain-to-brain, or Internal, BCI is simply a device which has both its input and output in the brain. There is much less development directed at Internal BCIs, mainly because they require a much more esoteric knowledge of the "codes" used by different regions of the brain. Nevertheless, Internal BCIs are extremely important as prosthetics, since they may eventually allow "replacement parts for the brain" as one of my colleagues likes to claim.

The most advanced work in this field has just been reported over the past eighteen months by researchers at the University of Southern California and Wake Forest Baptist Medical Center (as reported in Journal of Neural Engineering, Vol. 9, page 056012, 2012 and Vol. 8, page 046017, 2011). My colleagues have built on thirty years' study of the hippocampus, an area of the brain responsible for recording memory, and developed techniques that will eventually result in the ability to restore functions that have been lost due to age, disease or trauma. While not yet a true "device," this research represents a true "neural prosthetic" since it can read neural signals "in front of" a nonfunctioning brain area, then "write" a corresponding signal "behind" the damage in order to bypass the damage while still providing the same function of the damaged brain area. With more development, such an Internal BCI may also be able to enhance function. Although we neuroscientists tend to shy away from the idea of "implanting" memories, this BCI may also be useful in improving the ability to learn and remember.

Other Internal BCIs are in development both for repair of the brain and as part of Input and Output BCIs. True bionic vision, hearing or the sense of touch and feedback necessary for prosthetic limbs will encounter many of the same challenges faced by Internal BCIs, and these challenges are not trivial. The Internal BCI must incorporate both the "reading" of brain information similar to Output BCIs, and the "writing" of information necessary for Input BCIs. In addition, the understanding of natural brain "codes" will be of benefit to true brain-to-computer links for command and control of external devices (and not just prosthetics).

The major challenges faced by all of these BCIs continue to be: (1) understanding the patterns of electrical signals that form information used by the brain, and (2) having the ability to record and stimulate neurons to produce those patterns. With current technology, it is very difficult to do so from outside the brain. For this reason, there is a lot of research into techniques and materials for creating the interface between brain cells and external electrical devices.

BCI Electrodes: Interfaces for recording and stimulating within the brain

Brain machine interfaces are important not just for "bionics" but also for interfaces that would allow quadriplegics and those with neurodegenerative diseases to interface with the outside world. In discussing those types of interfaces, however, it is necessary to first go into a bit more explanation about how neurons work. (I have explained neurons in much greater depth in an entry in my blog, found here, for anyone wanting more information.) In basic terms, neurons generate very small electrical signals: about 100 microvolts, or about 10 nanoamps in current. It is quite easy to record these signals with very fine metal wires (electrodes) as long as we can get them very close to the neurons. Recording more neurons with fewer electrodes from greater distances is where it gets difficult. To get a neuron to become active on command requires a bit more voltage and current.

Given the ability to read electrical signals, one of the key questions in developing a brain machine interface then becomes understanding how the activity is combined or patterned: i.e. what is the nature of the (electrical) information that is to be interfaced? We need to know two things: (1) how much information do we want to input to the brain, and (2) what pattern does the brain expect to see? With visual and auditory prosthetics, researchers and doctors utilize residual neural function in the eye and ear, respectively, and simply provide a pattern of electrical stimulation that is "mapped" in a manner similar to the normal input to retina or cochlea. Tactile and proprioceptive sensation, as required for an effective bionic limb, is a little bit more complex, since the inputs will likely need to be transmitted directly to the appropriate area of the brain that normally receives input from limbs. Likewise, advanced improvements in visual and auditory prosthetics (the degree of sophistication required to truly replace sight and sound) will also need to provide inputs directly to the visual and auditory processing regions of the brain.

The second half, or second challenge, to developing BCI electrodes is in many ways much harder, even though it would seem to be the simpler problem. The type of electrode that is commonly used for recording neural activity is simply a wire, insulated along its length, with only a small area exposed at the tip. Sharpened tungsten electrodes may have an exposed surface of less than a micron, while stainless steel, nichrome, or platinum iridium wires typically have surface areas of about 20 to 50 square microns. Since the diameter of a single neuron is itself about 20 microns, one would think that these electrode sizes would be adequate for recording single neurons. In fact, the larger surface area of nonsharpened electrodes is more appropriate for recording multiple neurons, requiring software that can separate the activity of more than one neuron. While smaller diameter electrodes would seem to be more precise, and more accurate, they do not remain effective as long as nonsharpened electrodes. The major problem with this type of electrode is that either the wire or the brain tissue itself can move, reducing the effectiveness of the electrode. Another problem is that the glial cells which provide metabolic support to neurons will tend to encapsulate and insulate foreign objects in the brain (this is termed "gliosis"). When this happens to an electrode, its ability to record neural activity is decreased and eventually eliminated. One way around the problem is to implant the electrodes outside of the brain, into the nerves that would otherwise connect to the limb being replaced by the prosthetic. These electrodes exist and are in testing, but there are additional difficulties in identifying which signals go where once we get outside the brain.

"Michigan arrays"

Image courtesy of NeuroNexus

A much more recent approach is to develop recording electrodes that resemble printed circuits, with recording surfaces and structural materials that are inert. Silicon and ceramic substrates with printed platinum "wiring" provide many advantages for brain machine interfaces. In the first place, silicon, ceramic and platinum are much less likely to cause inflammation and gliosis. In the second place, the "printed circuit" process allows the exposure of only small regions of metal "recording surfaces," which can be manufactured in geometric patterns or grids that can record from or stimulate many neurons in many different areas, providing many orders of magnitude more information to be either recorded or stimulated. The "Utah Array" is one such array, while the "Michigan Arrays" represent a more classic printed circuit approach. It is still the case that implanted electrodes have a finite duration of function. We still don't know entirely how long electrodes of this type will remain functional when implanted into the human brain. Even the patients working with the bionic arm prosthesis will only have electrodes implanted into the brain for a few years. Research is ongoing to determine the "best" type of electrode that can remain functional for the lifetime of the patient.

Another form of "recording" has gained recent attention in which circuitlike electrodes are coated with chemicals that allow sensing of not just the electrical signals produced by neurons, but direct measurement of the flow of neurotransmitter chemicals between neurons. While most neuron activity is electrical, it is also partly chemical, and there is potential for chemical sensing to become as precise as electrical sensing. We do not yet know if these electrodes will last longer (or shorter) than electrodes with only electrical sensing capabilities, but the chemical sensing properties are very important in light of two new technologies that will be described in the next section.

Alternate Means of Interfacing to the Brain

In recent months I've seen developments that indicate that the field of neuroscience is not very far from developing effective bionics. Sometime in the next year, the first patient will be fitted with an upper limb prosthetic and will wear it for six months to a year. The means to record and decode the neural signals that control normal muscle movement have been around for at least two decades. However, it has not been until recently that we have risked implanting recording electrodes in humans to do this task. One of the great drawbacks of bionic interfacing is that we still don't have a very good technique for creating a two-way connection and allowing the patient to actually "feel" using a prosthetic limb.

One of the biggest drawbacks to the implantation of recording electrodes has been that they eventually become encapsulated by glial cells and scar tissue, the electrodes become less capable of recording, and the recordings become less precise. This is one of the major reasons why the patients who initially test our new bionic limbs will only have the use of them for a very short period of time. However, some remarkable new techniques have been developed over the past several years which may make metal recording electrodes obsolete.

The first technique uses a system very similar to that which I reported previously, as a future direction for developing a prosthetic for the retina. Healthy retina cells contain a pigment called "rhodopsin" which changes its shape when exposed to light. The rhodopsin embedded in cell membranes of a "rod" or "cone" causes the cell to react to light by opening molecule-sized chemical channels and generating small electrical signals, much the same way neurons react to chemical and electrical stimuli. The new field of "optogenetics" inserts chemicals similar to rhodopsin (collectively termed "opsins") into cells to make them sensitive to specific wavelengths of light. In the retina, this technique can be used to rebuild the light-sensitive cells that have been damaged by disease. However, in other brain areas, it can also be used to insert the capability of stimulating cells using light instead of electricity. The advantage to using optogenetics as a means of providing an interface from the machine back to the brain, is that the fiber-optic implants used to present the light stimulus are much less likely to produce inflammation and lead to scar tissue formation and gliosis of the electrode. The disadvantage is that multiple electrodes using fiber optics do not yet exist, and the technique for inserting the opsins into brain cells is still subject to some controversy.

On the other hand, the second exciting new technique still uses light, but instead of requiring a specialized molecule inserted into the neuron, it relies on infrared wavelengths, which produce a very small amount of heat focused on single neurons. The amount of infrared energy required to activate a neuron is considerably less than what would produce a measurable heating of the tissue around the infrared light source. Thus, it may turn out to be possible to stimulate neurons using light, with or without specialized opsins. With these two techniques at our disposal, what is needed now is a better way to record neural activity, and many of the same scientists using both optogenetics and infrared stimulation are looking for alternative recording techniques.

Electrode-less Interfaces

Not all BCIs require a direct interface with neurons. Quite frequently in the scientific literature, interfaces based on electrodes implanted into the brain are termed "brain machine interfaces" or BMIs, while interfaces that operate "noninvasively" are called BCIs, in contrast to the convention I have used in this article of calling all interfaces BCIs. This is one reason why the scientific literature can be a bit confusing: references to BMI versus BCI are often to essentially the same thing.

Noninvasive BCIs typically involve recording electroencephalographic (EEG) information from the scalp, using special amplifiers and processors to identify specific frequency components, and using that identification to drive a computer interface. The simplest such connection is somewhat reminiscent of the "biofeedback" devices popular in the '70s. A biofeedback monitor is simply a single channel EEG amplifier connected to a filter for the "alpha" frequency waveform of EEG. Using a technique called the fast Fourier transform, the relative power or quantity of waveforms that fall in the alpha frequency band between 8 and 12 Hz is represented with a simple analog meter. The alpha frequency is associated with meditation, relaxation, and quiet concentration. The commercially available device from NeuroSky was originally designed to measure increases and decreases in alpha frequency, and provides a simple on-off switch for computer interface. Their current devices measure five different frequency ranges, and can produce differential outputs to a computer based on the ratio of EEG frequencies. Like many brain machine interfaces, an EEG frequency-based BCI requires the user to train themselves to alter their EEG frequencies. As with biofeedback training, users learn how to produce active and quiet EEG through a process very similar to meditation.
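The alpha-band measurement described above can be sketched in a few lines. This is a minimal illustration and not NeuroSky's method: the function names, the 0.5 threshold, and the synthetic sine-wave "EEG" are assumptions made for the example.

```python
import numpy as np

def band_power_fraction(eeg, sample_rate, band=(8.0, 12.0)):
    """Fraction of the signal's (non-DC) spectral power inside `band`, in Hz."""
    eeg = np.asarray(eeg, dtype=float)
    eeg = eeg - eeg.mean()                       # remove any constant offset
    power = np.abs(np.fft.rfft(eeg)) ** 2        # power at each frequency
    freqs = np.fft.rfftfreq(len(eeg), d=1.0 / sample_rate)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    total = power[1:].sum()                      # skip the DC bin
    return power[in_band].sum() / total if total > 0 else 0.0

def alpha_switch(eeg, sample_rate, threshold=0.5):
    """On when alpha dominates the signal; the threshold is an assumed value."""
    return band_power_fraction(eeg, sample_rate) > threshold

# Synthetic two-second recordings: "relaxed" is mostly 10 Hz (alpha),
# "active" is mostly 25 Hz (a faster rhythm outside the alpha band).
sample_rate = 256
t = np.arange(2 * sample_rate) / sample_rate
relaxed = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 25 * t)
active = 0.2 * np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 25 * t)
print(alpha_switch(relaxed, sample_rate), alpha_switch(active, sample_rate))
```

The analog meter on an old biofeedback box corresponds to the value returned by `band_power_fraction`; comparing that value to a threshold gives the simple on-off switch.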

More sophisticated BCIs are being developed that use more than just the frequency of EEG to drive a computer interface. Increasingly sophisticated devices such as NeuroSky's, and the BCI2000 system pioneered by Gerwin Schalk, have begun to take multiple channels of EEG and look for the much finer detail that represents movement intention and focused attention. The two processes are very similar. Research in the 1980s and '90s demonstrated that areas of the brain involved in planning of movement became active several seconds before an actual limb movement. By mapping this activity, researchers could correlate the neural activity with the actual muscle activation, and control a robotic arm by "thought" alone. Such a finding is very important for developing a prosthetic to replace an amputated limb, since prosthetic movement needs to be controlled by the "intention" to move. On the other hand, researchers also found that when subjects focus their attention on the type of movement they wish to make, the resulting brain activity can likewise be correlated with movement.

Difficult as it was to perform these analyses with electrodes implanted directly into the brain, it is even more difficult to select the information-containing components from EEG recorded from outside the skull. The more distant an electrode is located from the neural activity that it is to record, the weaker the signal, and the more noise or unrelated information can be recorded at the same time. EEG is a form of recording known as "volume conduction" in which the sum of all of the neural signals from a large volume is combined onto each electrode. The intervening skull and scalp also contribute to attenuation of the signal from specific brain areas. The best way to correct for these effects is to use multichannel EEG in which a dozen or more electrodes are placed onto the scalp, and signals can be localized by comparison between pairs of electrodes with different spatial orientation with respect to the brain area being recorded. While this enables finer detail in recording, it still does not compare to the detail that can be obtained with implanted electrodes. Neuroscience as a field is quite familiar with this problem. It is typically referred to as "the inverse problem" in that it requires reverse engineering the inputs (neural activity in specific brain areas) from the EEG output. It is a computationally intensive effort that has not yet been solved. However, in the interim, devices such as those produced by NeuroSky and analysis platforms such as BCI2000 allow information to be derived from the EEG and used to drive a computer or device.
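A toy model shows why more scalp electrodes help and why the inverse problem is hard. Everything here is an assumption for illustration: three made-up brain "sources," random mixing weights standing in for volume conduction, no noise, and a mixing matrix that is known exactly (in real EEG it is not, and there are vastly more sources than electrodes).

```python
import numpy as np

rng = np.random.default_rng(0)
n_sources, n_samples = 3, 200
sources = rng.standard_normal((n_sources, n_samples))  # hypothetical source activity

def mixing_matrix(n_electrodes, n_sources, rng):
    """Hypothetical volume-conduction weights: every electrode sees every source."""
    return rng.uniform(0.1, 1.0, size=(n_electrodes, n_sources))

# Twelve electrodes, three sources: more measurements than unknowns,
# so least squares can untangle the mixture.
A_many = mixing_matrix(12, n_sources, rng)
scalp_many = A_many @ sources
estimate_many, *_ = np.linalg.lstsq(A_many, scalp_many, rcond=None)
print(np.allclose(estimate_many, sources))

# Two electrodes, three sources: fewer measurements than unknowns,
# so many different source patterns explain the same scalp recording.
A_few = mixing_matrix(2, n_sources, rng)
scalp_few = A_few @ sources
estimate_few, *_ = np.linalg.lstsq(A_few, scalp_few, rcond=None)
print(np.allclose(estimate_few, sources))
```

In the second case the estimate reproduces the scalp recording perfectly yet is not the true source activity, which is the essence of the inverse problem: the scalp data alone do not pin down what happened inside.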

Science, Science Fiction and BCIs

Brain computer interfaces of all types have application not only in bionics and prosthetics, but in providing communication and device control for quadriplegics and those suffering from debilitating neural diseases such as ALS. They are an important component not only of neuroscience but of rehabilitative medicine, and have the potential to teach us much more about the brain than we have already learned in the process of developing these devices. Even so, the current state of the art in bionics, prosthetics, and brain machine interfaces is still fairly crude. This is not to say that it is not effective, or that it does not provide sufficient information to drive a prosthetic or an interface. It is simply that we are still a long way from the "Six Million Dollar Man" ideal of an interface directly from machines to the brain and from the brain to machines.

So what will it take to get to Brain Ships and bionic individuals? The simple answer is time-plus-money, but the more realistic answer is that the field needs more breakthroughs in means of recording from and stimulating neurons without wires. In The Ship Who Searched, there was little doubt that Hypatia Cade's "shell" and Doctor Kenny's "half shell" were extensively wired into the patient. SF in general has been much less squeamish about the idea of wires and electrodes than the general public, but then the advantage of writing (and reading) SF lies in not having to worry about gliosis and the useful lifespan of wires in the brain!

The wireless BCIs of James P. Hogan's The Genesis Machine or Realtime Interrupt represent the ultimate goal of neural prosthetics: an inductive or "noninvasive" connection between instrumentation and neurons. Current research using optical interfaces (optogenetics and infrared) has shown some ability to both record and stimulate, but these interfaces often do not have the "bandwidth" to convey much information. Refinements of both our electronic and optical capability will certainly accelerate progress. Other approaches, using magnetic resonance (similar to MRI machines) or direct measurement of minute magnetic fields, may also lead to substitutes for "wiring" of electrodes into the brain. There is certainly a lot of information available either on the surface, or immediately outside the body, that could be read if we had the technology. Electroencephalography reads signals from the scalp that represent all of the activity in the brain. Unfortunately, it truly is all of the information at once, and we do not yet have the means of calculating just which portions of the EEG correspond to each desired brain area. Fortunately, this is primarily a matter of computer and information processing, and we twenty-first century humans excel at that!

How far are we away from true BCIs? From shellpersons? From the full-body interface of The Ship Who Searched? For that matter, virtually every "advanced" technology of the past century has turned into entertainment (laser cat toys, virtual reality, computer games, 3-D TV, wireless networks, smartphones, etc.), so how far are we away from direct neural interfaces for entertainment? The first wearable bionic limbs are probably being tested even as I write (and you read) this article. I predict we will see the same time-scale for prosthetic limbs that we have seen with cochlear and retinal implants, that is, we will see fewer than one hundred patients over the next ten years, but hundreds of thousands over the next forty years.

Advancing beyond single limb prosthetics to a half-shell (exoskeleton) or a full shell prosthetic will require much more than the interfaces described in this article. A life-support shell will have to do just that: support the life of the subject. Still, I predict we will have that capability before the end of the century. Broadband full-sensory interfaces will likely develop in parallel with life-support shells, but I predict they will probably take about 25 percent less time, since an interface will not require the life support of a shell. Thus, a half-shell or full-body interface will likely take about 70 years starting from current technology. Of course, as with any good SF, a radical scientific breakthrough would certainly trash my time-scale and speed things up!

I know the gamers among you are still wondering when we can use direct neural interfaces to play Halo 10! Well, the truth is that brain-controlled interfaces for computer "games" already exist in the form of the NeuroSky and Mattel Mindflex interfaces. In 2010, musician Robert Schneider created his "Teletron" to synthesize music using a BCI. BCIs as computer and game control devices are already here; however, inputs back to the brain may take a bit longer. The first sight and sound inputs will likely be reminiscent of Atari graphics, but my prediction is that we will start seeing BCIs for entertainment in 20-30 years.

Science fiction is around us every day. Neuroscientists are probably halfway to the technology required for BrainShips and Cyborgs. Fortunately for those of us in the field, we have both the optimistic and cautionary tales of SF to guide us. Once we accomplish these goals, we'll just have to rely on SF to establish the next set of goals – a bit further out!

Copyright © 2023 by Robert E. Hampson

Dr. Tedd Roberts, known to many science fiction fans as "Speaker to Lab Animals," is a research scientist in neuroscience. For the past thirty years, his research has concentrated on how the brain encodes information about the outside world, how that information is represented by electrical and chemical activity of brain cells, and the transformation of that information into movement and behavior. He blogs about his work here.